Container Solution

Introduction

Challenges in securing the Kubernetes environment

Kubernetes is a powerful orchestration tool that manages containerized applications, enabling deployment, scaling, and management across clusters of machines.

New Application Architecture

- Microservices replace monolithic applications, making it easier to manage each service independently.

- Communication between microservices is mostly over HTTP/HTTPS.

- Kubernetes handles the network provisioning and orchestration of these microservices.

New Deployment Model

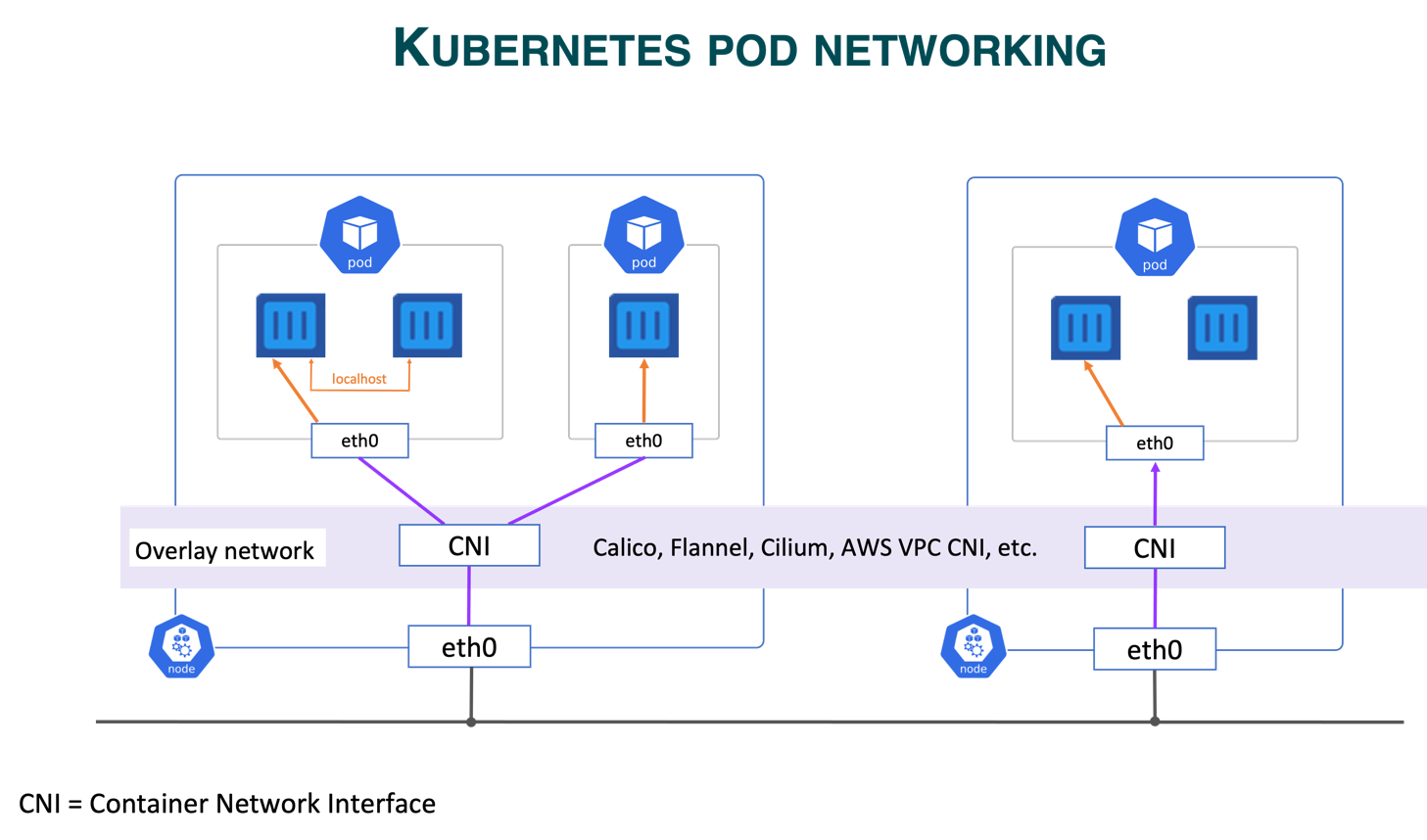

- In a Kubernetes environment, there is an underlying cluster infrastructure and an overlay architecture where the microservices are deployed.

- Containers encapsulate the application, its dependencies, and libraries, ensuring consistency across different environments.

- Each pod may contain one or more containers that share storage and networking resources.

- Pods can scale dynamically across nodes, and as they do so, their IP addresses and locations within the cluster are constantly changing.

- Services abstract away the dynamic nature of pod IP addresses, offering a stable way to communicate with pods even as their IPs change over time.
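The last two points can be illustrated with a small simulation. This is only a sketch, not Kubernetes code: the `Pod` class, the `resolve_endpoints` helper, and the sample names and IPs are hypothetical. They model how a label selector keeps pointing at the right pods while the pods themselves are replaced.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pod:
    """Hypothetical stand-in for a running pod."""
    name: str
    ip: str
    labels: tuple  # (key, value) pairs


def resolve_endpoints(selector: dict, pods: list) -> list:
    """Return the IPs of all pods whose labels match every selector entry."""
    return sorted(
        pod.ip for pod in pods
        if all((key, value) in pod.labels for key, value in selector.items())
    )


selector = {"app": "reviews"}

# Before a rollout: two replicas of the reviews service.
before = [
    Pod("reviews-1", "10.0.1.12", (("app", "reviews"),)),
    Pod("reviews-2", "10.0.2.7", (("app", "reviews"),)),
    Pod("search-1", "10.0.1.30", (("app", "search"),)),
]

# After a rollout: same service, entirely new pod IPs.
after = [
    Pod("reviews-3", "10.0.3.41", (("app", "reviews"),)),
    Pod("reviews-4", "10.0.1.99", (("app", "reviews"),)),
    Pod("search-1", "10.0.1.30", (("app", "search"),)),
]

endpoints_before = resolve_endpoints(selector, before)
endpoints_after = resolve_endpoints(selector, after)
print("before:", endpoints_before)
print("after: ", endpoints_after)
```

The selector never changes, yet the resolved addresses are completely different after the rollout. Callers that address the service by its stable name are unaffected, while anything that recorded the old pod IPs is now out of date.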

For a deeper understanding of Kubernetes infrastructure, refer to Appendix A.

Existing Segmentation Approaches Don't Address the Real Attack Surface

Securing these dynamic, short-lived microservices introduces new challenges, because existing network- or CNI-based security cannot effectively protect the real attack surface of microservices, which primarily operate and communicate at the API layer. Some of the challenges of adopting a CNI-based segmentation approach are:

- Limitations of Network Policies: While Kubernetes network policies allow control over pod-to-pod communication, they lack the fine-grained control needed for more complex scenarios (e.g., controlling traffic between containers within a single pod or traffic between microservices).

- Complexity & Performance Overhead: As the size of a Kubernetes cluster grows (e.g., number of nodes, pods, and network policies), CNI-based solutions may experience performance degradation. The overhead of managing large sets of network policies can increase latency, reduce network throughput, and add policy complexity.

- IP and Pod Churn: Kubernetes is dynamic and pods are ephemeral, so IP addresses change frequently. Network policies must be designed to handle this dynamic IP assignment. If policies are too rigid (e.g., IP-based), this churn can introduce vulnerabilities or cause policies to become outdated quickly. Kubernetes network policies support label-based selectors, and these are recommended for in-cluster workloads; IP-based rules are best reserved for endpoints outside the cluster.

- Multiple CNI approaches: While Kubernetes supports multiple CNI plugins (e.g., Calico, Cilium, Flannel, Weave), network policy support and features differ across implementations. This lack of standardization can lead to inconsistent behavior, making cross-platform compatibility difficult.

- Layer 7 Visibility & Policy Enforcement: Most CNI plugins enforce network policies at Layer 3 (network layer) and Layer 4 (transport layer), restricting traffic based on IP addresses and ports. They lack native support for Layer 7 (application layer) policies, such as filtering based on URLs or HTTP methods.
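The Layer 7 gap can be made concrete with a toy comparison. The sketch below is illustrative only: `l4_allows`, `l7_allows`, and the request fields are hypothetical and do not correspond to any CNI plugin's API.

```python
def l4_allows(request: dict) -> bool:
    """An L3/L4 rule sees only who is talking and on which port."""
    return request["source_label"] == "storefront" and request["dest_port"] == 8080


def l7_allows(request: dict) -> bool:
    """An L7 rule can additionally inspect the HTTP method and path."""
    return (
        l4_allows(request)
        and request["method"] == "GET"
        and request["path"].startswith("/search")
    )


# Two requests from the same caller to the same port.
legit = {"source_label": "storefront", "dest_port": 8080,
         "method": "GET", "path": "/search?q=shoes"}
abuse = {"source_label": "storefront", "dest_port": 8080,
         "method": "POST", "path": "/admin/export"}

for name, req in (("legit", legit), ("abuse", abuse)):
    print(name, "L4:", l4_allows(req), "L7:", l7_allows(req))
```

Both requests look identical to the L3/L4 rule, so it admits both. Only the rule that inspects the method and path can tell the search call apart from the administrative one.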

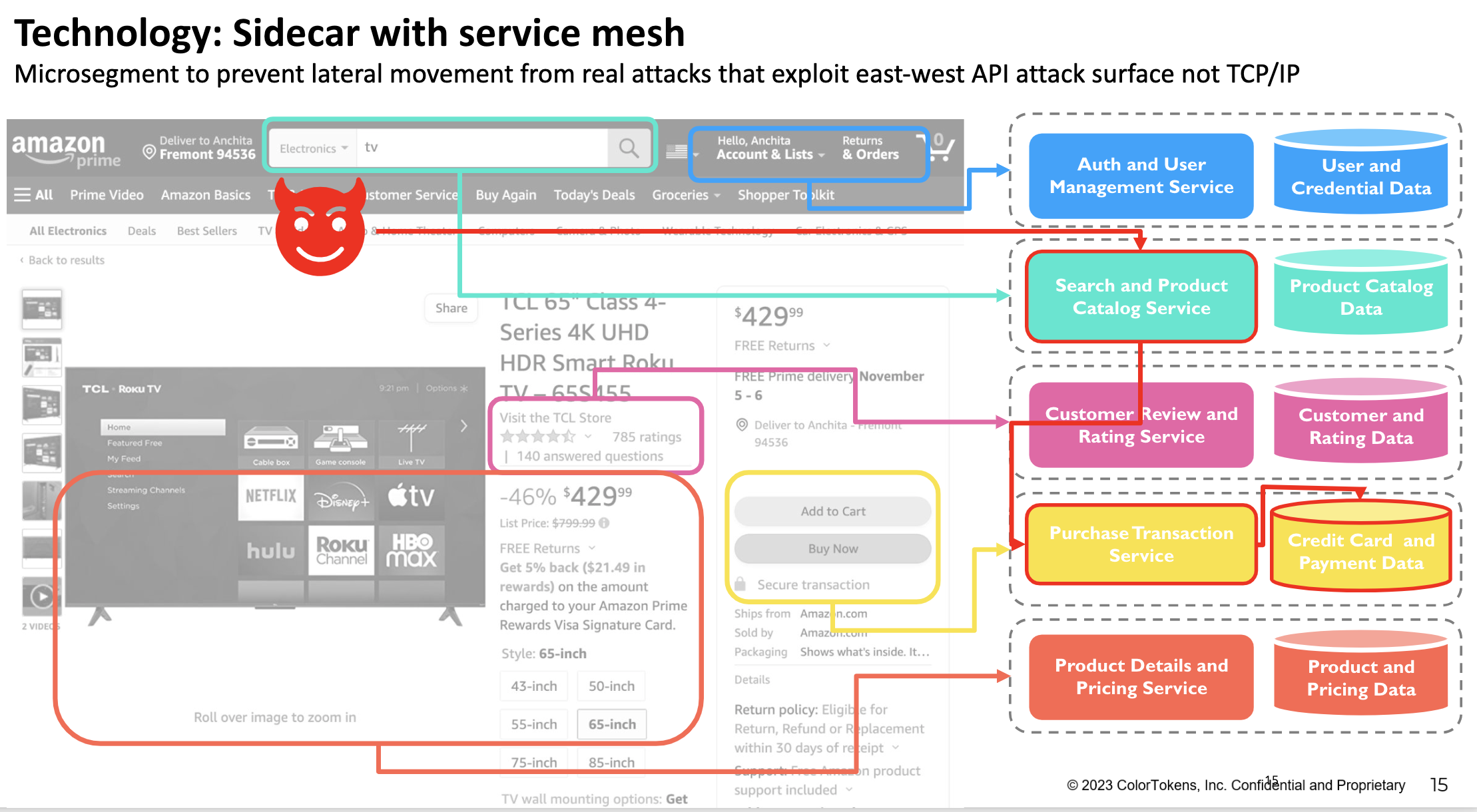

The shift to microservices architecture means that these services are managed by different teams and often shared across multiple applications, blurring traditional application boundaries. For instance, a transaction service might be used by both an online store and a cloud platform, while customer review services could be shared across various applications. With potentially hundreds of interconnected microservices communicating via HTTP or APIs, vulnerabilities in one, such as a search API, can lead to significant risks. An attacker exploiting such a vulnerability could gain unauthorized access to sensitive services and data, like credit card information, highlighting the importance of stringent security protocols in microservice ecosystems.

Securing Deployment Environments

Let us understand the use cases for securing Kubernetes environments effectively:

Securing Kubernetes Cluster Infrastructure

Microservices can be deployed in either managed or unmanaged Kubernetes environments.

Managed Kubernetes Environments

Most organizations are adopting managed Kubernetes. In this type of deployment, the cloud service provider's managed offering (for example AKS, EKS, or GKE) handles provisioning, management, and autoscaling of the Kubernetes infrastructure, and the user's responsibility is to secure the microservices.

Unmanaged Kubernetes Environments

In unmanaged environments, the responsibility of securing underlying worker nodes falls directly on the user. This involves securing the nodes with plugins such as Container Network Interface (CNI) and integrating with tools that enhance network security.

Securing Serverless Containers

Serverless container platforms run on fully managed nodes: users do not manage the infrastructure underneath the pods and containers, which is handled by the respective cloud provider. Xshield can secure serverless containers, which many other vendors do not support.

Kubernetes itself supports auto-scaling to adjust resource availability, but securing the orchestration plane is critical to ensure clusters are protected from attacks. Implementing role-based access control (RBAC), configuring proper network policies, and regularly patching and updating the underlying infrastructure are essential steps in maintaining a secure cluster environment.
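As one illustration of the RBAC step, the sketch below builds a namespaced, read-only Role manifest as a Python dictionary and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The role name `pod-reader` and the namespace `production` are placeholders chosen for this example.

```python
import json

# Read-only access to pods in a single namespace.
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": "production"},
    "rules": [
        {
            "apiGroups": [""],  # "" denotes the core API group
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],
        }
    ],
}

print(json.dumps(role, indent=2))
```

A Role grants nothing until a RoleBinding attaches it to a user, group, or service account, so least privilege depends on keeping both the verb list and the set of bound subjects small.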

Securing Microservices

Namespace Isolation

Kubernetes clusters often have multiple namespaces such as production and development. To ensure security, namespace isolation is vital to prevent unauthorized access and cross-communication between environments. This ensures that only authorized entities can communicate within their designated namespaces.
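A minimal sketch of native namespace isolation follows, assuming the cluster's CNI plugin enforces NetworkPolicy. The helper name `same_namespace_only` and the policy name are hypothetical; the manifest fields are those of the standard `networking.k8s.io/v1` NetworkPolicy resource.

```python
import json


def same_namespace_only(namespace: str) -> dict:
    """Build a NetworkPolicy that admits ingress only from pods in `namespace`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            # Empty selector: the policy applies to every pod in the namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [
                # A bare podSelector in `from` matches pods in this namespace only.
                {"from": [{"podSelector": {}}]}
            ],
        },
    }


production_policy = same_namespace_only("production")
print(json.dumps(production_policy, indent=2))
```

This isolates the namespace at Layers 3 and 4 only. It says nothing about which API calls are permitted between the pods that remain allowed to talk.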

Application Ring Fencing

When multiple applications share the same infrastructure, it's crucial to enforce application namespace isolation. Application ring fencing helps achieve this by preventing applications from talking to each other unless explicitly allowed, thus reducing the risk of lateral movement in case of a security breach.
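The ring-fencing rule (default deny across application boundaries, with explicit exceptions) can be sketched as a simple decision function. The application names and the `ALLOWED_FLOWS` table are invented for illustration and are not an Xshield data model.

```python
# Explicit allow list of (source application, destination application) pairs.
ALLOWED_FLOWS = {
    ("online-store", "transactions"),
    ("cloud-platform", "transactions"),
}


def is_allowed(src_app: str, dst_app: str) -> bool:
    """Allow traffic inside one application's ring; across rings, require an explicit entry."""
    return src_app == dst_app or (src_app, dst_app) in ALLOWED_FLOWS


checks = [
    ("online-store", "online-store"),    # same ring
    ("online-store", "transactions"),    # explicitly allowed
    ("online-store", "cloud-platform"),  # no entry: denied
    ("transactions", "online-store"),    # allow entries are directional
]
for src, dst in checks:
    print(f"{src} -> {dst}: {is_allowed(src, dst)}")
```

Because the allow list is directional, a compromised shared service cannot use an inbound permission to open connections back into the applications that call it.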

Securing Communications Between Microservices and Non-Kubernetes Environments

Microservices often need to interact with external systems such as PaaS services (e.g., S3, Lambda) or databases (e.g., PostgreSQL, MySQL) that reside outside the Kubernetes cluster. Securing this communication is essential to protect sensitive data. Techniques such as network segmentation, firewall rules, and encryption (e.g., using SSL/TLS) are necessary to safeguard communication between Kubernetes microservices and these external environments. For instance, when microservices access customer data from an external database, the connection should use secure protocols to ensure data integrity and confidentiality.
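On the client side, the encryption requirement might look like the following sketch, which uses Python's standard `ssl` module to build a context that verifies the server certificate and hostname. The helper name `strict_client_context` and the TLS 1.2 floor are choices made for this example, not requirements stated above.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Create a client-side TLS context with verification enabled."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 (an assumption for this sketch).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


ctx = strict_client_context()
print(ctx.verify_mode, ctx.check_hostname, ctx.minimum_version)
```

Many client libraries accept an `SSLContext` like this one when opening a connection. Encryption protects the data in transit, while segmentation and firewall rules still decide which workloads may open the connection in the first place.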

There have been several attempts to address these security use cases, but the existing approaches fail to provide a true zero-trust security posture. In the next section, we will explore the current approaches in the market and highlight why they fall short of achieving comprehensive zero-trust security.

Current Approaches

Several vendors have tried to solve the microservice security problem, but their solutions have significant limitations. Below are some common approaches and their challenges.

Policy Enforcement at the Kubernetes Networking Level (IPVS/IPTables)

Some vendors enforce policies at the Kubernetes networking level by controlling traffic through IPVS or IPTables. While this offers visibility and basic control, it comes with challenges:

- Dependency on IP-based Policies: This approach relies on static IP addresses, which do not align with the dynamic nature of containerized environments.

- Outdated Network Models: Solutions depend on older network models such as IPTables, which have limited scalability and performance.

- Lack of Layer 7 Visibility: Enforcing policies at the network level does not provide the deep visibility needed for API-level security.
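The first limitation can be shown with a short simulation of a static IP rule going stale after pods are rescheduled. Everything here is hypothetical: the rule tables, pod records, and addresses exist only to illustrate the failure mode.

```python
# Rule written when the storefront pod happened to run at 10.0.1.12.
ALLOWED_SOURCE_IPS = {"10.0.1.12"}
ALLOWED_SOURCE_LABELS = {("app", "storefront")}


def ip_rule_allows(pod: dict) -> bool:
    """Static IP rule: decides on the address alone."""
    return pod["ip"] in ALLOWED_SOURCE_IPS


def label_rule_allows(pod: dict) -> bool:
    """Identity rule: decides on the workload's labels, whatever its IP."""
    return any(item in ALLOWED_SOURCE_LABELS for item in pod["labels"].items())


# After rescheduling, the storefront has a new IP and an unrelated
# batch job has been assigned the old one.
storefront = {"ip": "10.0.3.41", "labels": {"app": "storefront"}}
batch_job = {"ip": "10.0.1.12", "labels": {"app": "batch-report"}}

for name, pod in (("storefront", storefront), ("batch_job", batch_job)):
    print(name, "ip rule:", ip_rule_allows(pod), "label rule:", label_rule_allows(pod))
```

The stale IP rule fails in both directions: it blocks the legitimate caller and admits an unrelated workload that inherited the address. The identity-based rule is unaffected by the move.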

Policy Enforcement at the Pod Network Level with CNI

Another approach, employed by vendors, is policy enforcement at the pod level using Container Network Interface (CNI) plugins. This method segments traffic within the Kubernetes cluster, but it has its own limitations:

- Dependency on Networking Plugins: The enforcement of security policies depends on the network plugins, which may not be optimized for security at the application layer.

- Lack of Layer 7 Control: Policies applied at the pod level do not extend to Layer 7, which is where API communications occur.

- Limited Observability: This approach does not provide sufficient insights into service-to-service communications at the API level.

While these approaches address certain aspects of microservice security, none of them deliver a true zero-trust architecture. In the next section, we will explore how ColorTokens approaches this problem to deliver a true zero-trust security posture to customers.

ColorTokens Approach

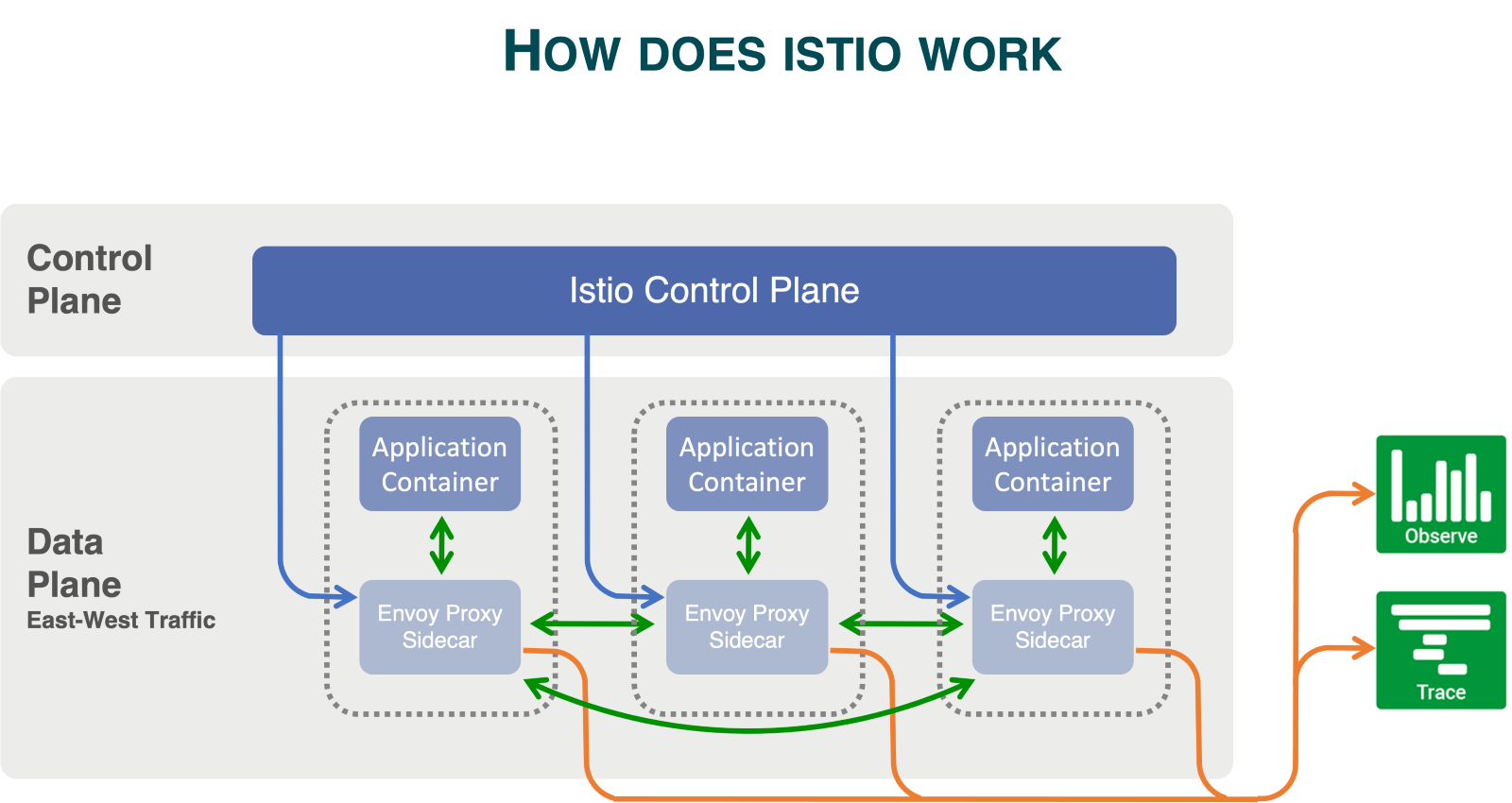

ColorTokens Xshield delivers a complete agentless solution to secure these microservices environments by integrating with service mesh technologies like Istio.

As discussed above, microservices are dynamic and transient: containers and pods scale up and down frequently, and services typically communicate over HTTP/HTTPS APIs rather than through static IPs and ports. This makes network-level security policies inadequate.

Securing communication at the API layer requires a proxy. Rather than relying on vendor-specific proxies, service meshes offer a robust, established alternative: they provide a proxy such as Envoy by default, which is widely deployed across Kubernetes environments.

For the benefits of Istio, please refer to Appendix B.

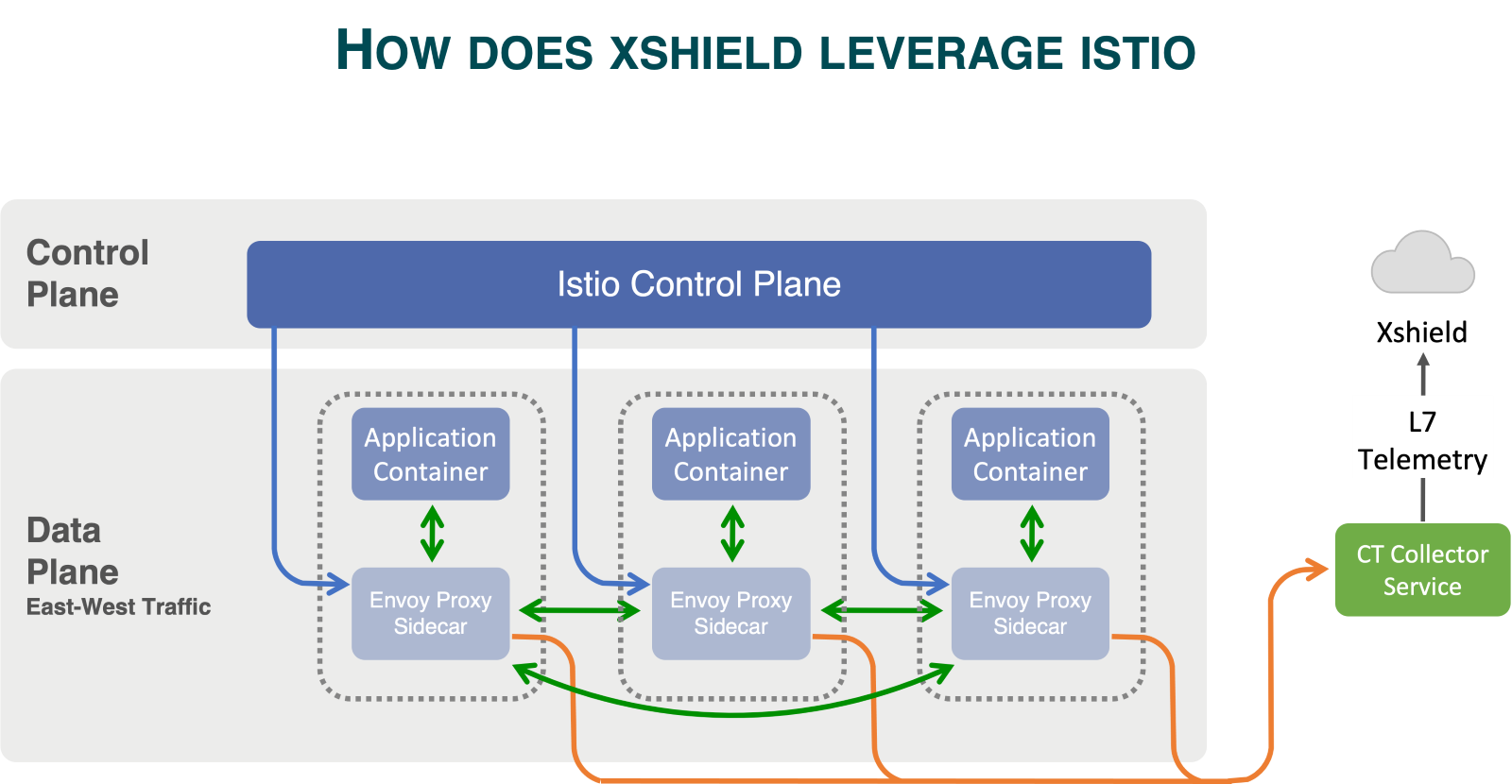

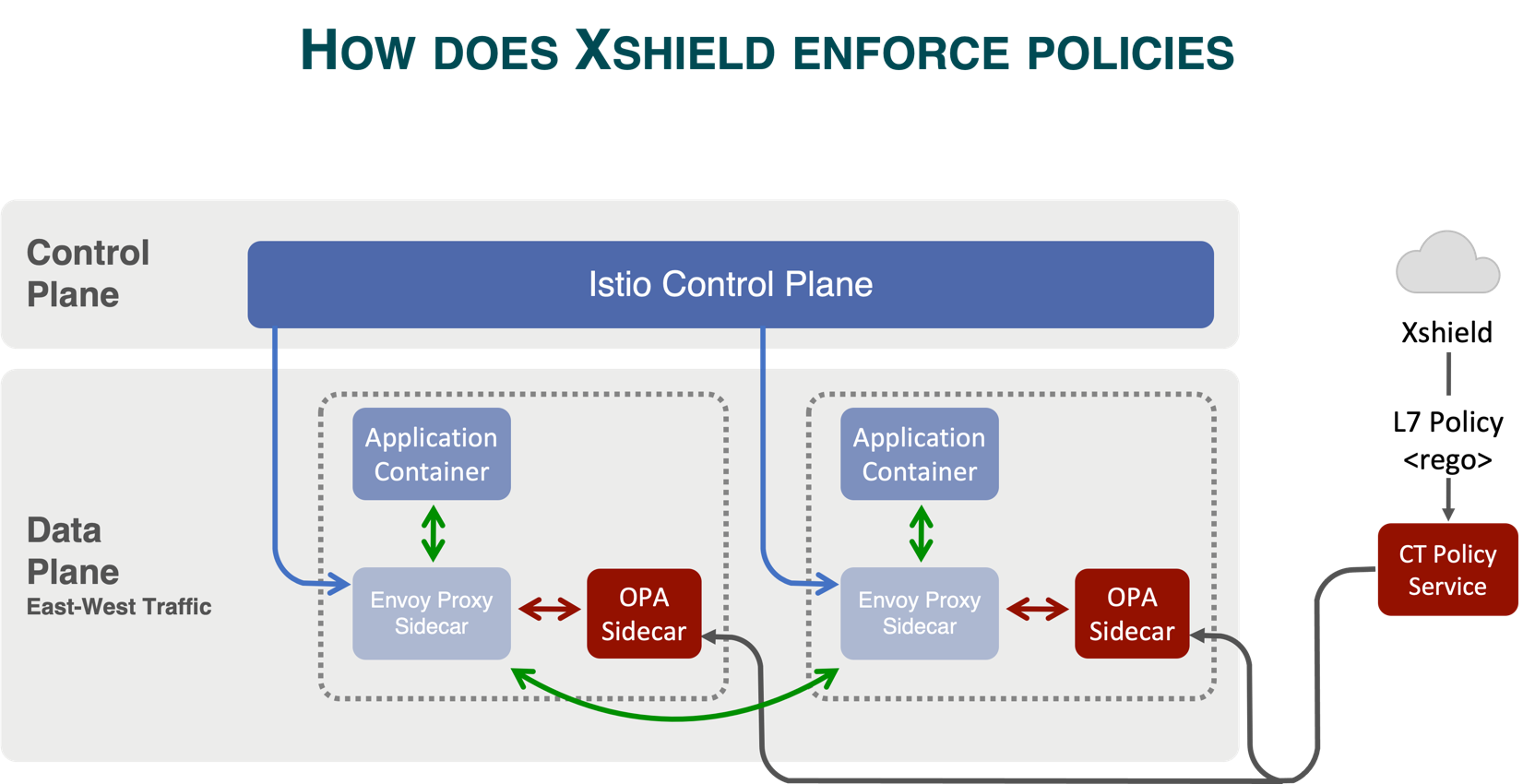

ColorTokens leverages Istio's proxy to provide micro-segmentation benefits at the API layer, ensuring that microservice communications are effectively secured. By integrating with Istio, Xshield provides security at the application layer, ensuring that all microservice communications are encrypted and authenticated. This approach allows organizations to implement zero-trust security principles by monitoring and controlling the flow of data between services.

Let us now delve into the solution provided by ColorTokens, which brings all these components together for a comprehensive zero-trust security model.

ColorTokens Solution

ColorTokens Xshield offers a comprehensive solution for securing containerized environments, such as Kubernetes. It empowers organizations by providing visibility into microservice communication and enabling seamless policy enforcement across the entire infrastructure. With its agentless architecture and integration with service mesh technologies like Istio, Xshield simplifies micro-segmentation and ensures a robust security posture.

Complete Agentless Deployment

The Xshield agentless container solution eliminates the need to deploy agents to secure the container environment. It works with existing service mesh technologies like Istio and integrates with DevOps workflows, such as Helm charts, for rollout. Xshield supports enterprise deployment scenarios, including both managed and unmanaged Kubernetes environments.

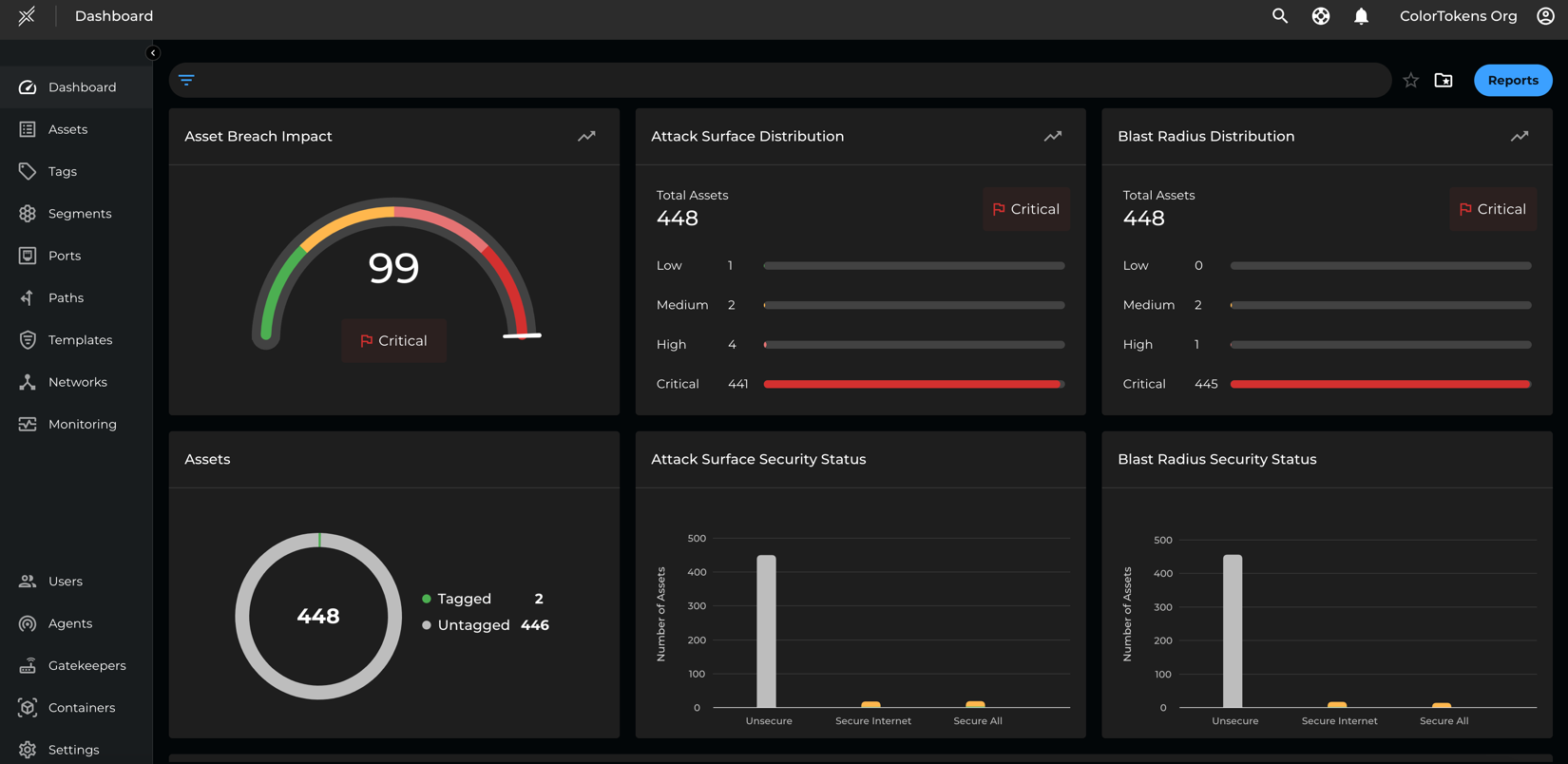

Visualization

Xshield's visualizer discovers all microservices and their metadata, lets teams define logical business segments, and shows the communications between services in multiple contexts, such as namespaces, services, clusters, and applications. While other Kubernetes visualization tools focus primarily on observability, Xshield enables security teams to understand the breach impact risk of their microservices, define policies, and validate them so that enforcement causes no business disruption.

Policy Management

Due to their design, microservices communicate differently from traditional applications. They use Application Programming Interfaces (APIs) rather than static IP addresses and ports. Therefore, conventional micro-segmentation policies based on IP addresses and ports are inadequate for protecting microservices. Instead, security policies must be applied at the API level, as microservices often use dynamic IPs and overlay networks, which traditional methods cannot address effectively.

The Xshield visualizer shows API-level communications between all microservices and recommends policies that can be simulated and enforced to secure those communications. This saves security teams the time and manual effort of parsing the API logs between services and authoring each policy by hand for an external authorization service. Users can define reusable templates to secure communications between microservices and between microservices and non-microservices. Xshield leverages OPA (Open Policy Agent) to integrate with the Istio Envoy proxy to secure communication between microservices.

A key challenge in container environments is enforcing policy without delay or performance impact when pods are short-lived and IP addresses are ephemeral. By integrating with the service mesh, Xshield enforces policy at the service level, so policies do not depend on IP addresses and enforcement is not delayed by pod churn.
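To make API-level policy concrete, the sketch below mimics in Python the kind of allow or deny decision an external authorization service makes for each request. It is not Xshield's policy format and it is not Rego. The input layout loosely follows the request attributes that Envoy's external authorization filter forwards (caller principal, HTTP method, and path), and the `RULES` table, service accounts, and paths are invented for illustration.

```python
from fnmatch import fnmatchcase

# Hypothetical allow rules: caller identity, HTTP method, path pattern.
RULES = [
    {"principal": "spiffe://cluster.local/ns/shop/sa/storefront",
     "method": "GET", "path": "/api/v1/search*"},
    {"principal": "spiffe://cluster.local/ns/shop/sa/checkout",
     "method": "POST", "path": "/api/v1/transactions"},
]


def authorize(check_request: dict) -> bool:
    """Allow the request only if some rule matches identity, method, and path."""
    attrs = check_request["attributes"]
    principal = attrs["source"]["principal"]
    http = attrs["request"]["http"]
    return any(
        rule["principal"] == principal
        and rule["method"] == http["method"]
        and fnmatchcase(http["path"], rule["path"])
        for rule in RULES
    )


def make_request(principal: str, method: str, path: str) -> dict:
    """Assemble an input document in the assumed layout."""
    return {"attributes": {"source": {"principal": principal},
                           "request": {"http": {"method": method, "path": path}}}}


storefront = "spiffe://cluster.local/ns/shop/sa/storefront"
search_ok = authorize(make_request(storefront, "GET", "/api/v1/search?q=shoes"))
payment_denied = authorize(make_request(storefront, "POST", "/api/v1/transactions"))
print(search_ok, payment_denied)
```

The decision depends on who is calling and which API operation is requested. No IP address or port appears anywhere in the rule set.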

Securing Communications Between Microservices

Namespace Isolation

Xshield enhances Kubernetes' native namespace isolation by visualizing API-level communication and applying fine-grained security policies. This ensures that microservices in different environments (such as production and development) remain isolated, preventing unauthorized cross-namespace communication.

Application Ring-Fencing

Xshield implements ring-fencing using Istio and OPA to prevent unauthorized communication between applications, reducing the risk of lateral movement during a security breach.
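How Xshield generates its policies is not described here, so the following is only a sketch of what a ring fence can look like when expressed as a plain Istio AuthorizationPolicy. The policy name, namespaces, labels, service account, and path are placeholders; the field names are those of Istio's `security.istio.io` AuthorizationPolicy resource.

```python
import json

# Only the checkout service account may POST to the transactions API.
policy = {
    "apiVersion": "security.istio.io/v1beta1",
    "kind": "AuthorizationPolicy",
    "metadata": {"name": "transactions-ring-fence", "namespace": "payments"},
    "spec": {
        # Workloads this policy protects.
        "selector": {"matchLabels": {"app": "transactions"}},
        "action": "ALLOW",
        "rules": [
            {
                # Caller identity, taken from the peer's mTLS certificate.
                "from": [{"source": {"principals": ["cluster.local/ns/shop/sa/checkout"]}}],
                # Permitted API operation.
                "to": [{"operation": {"methods": ["POST"],
                                      "paths": ["/api/v1/transactions"]}}],
            }
        ],
    },
}

print(json.dumps(policy, indent=2))
```

Once an ALLOW policy selects a workload, requests that match none of its rules are denied, which gives the default-deny behavior that ring-fencing relies on. Matching on `principals` requires mutual TLS between the services.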

Securing Communications Between Microservices and Non-Kubernetes Environments

Xshield extends its security capabilities beyond Kubernetes environments by supporting secure communication between Kubernetes microservices and external systems (e.g., databases, PaaS services).

Conclusion

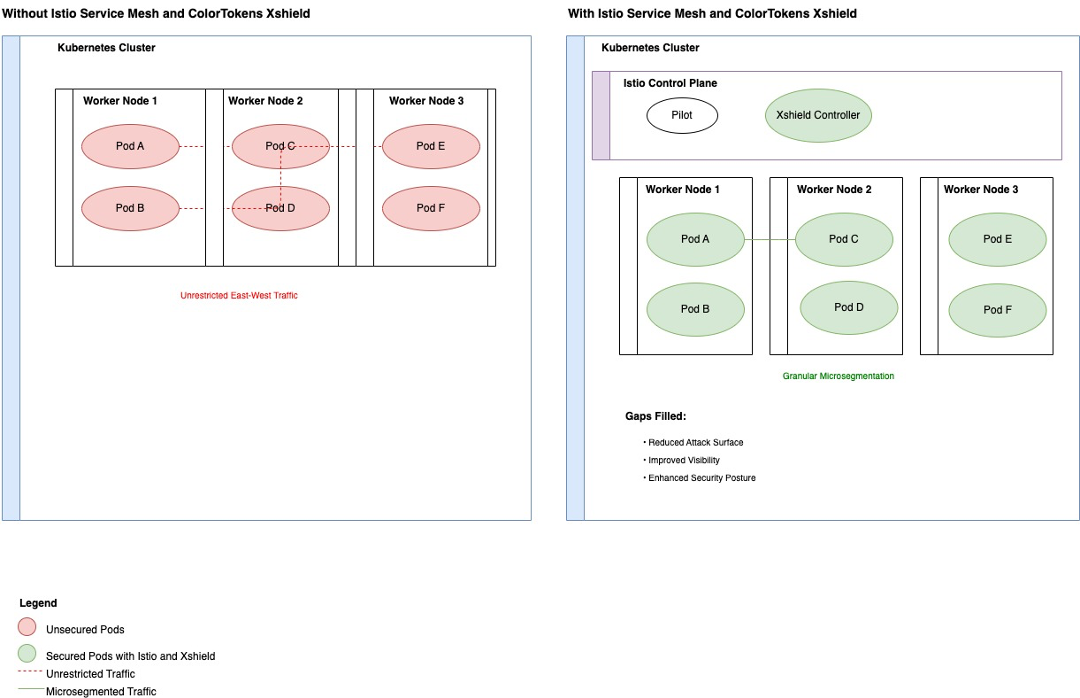

The image above illustrates a gap analysis comparing a Kubernetes cluster setup with and without Istio Service Mesh and ColorTokens Xshield.

On the left, a Kubernetes cluster without Istio and Xshield is exposed because traffic between pods is unsupervised. In this setup, pod-to-pod traffic is unmonitored, posing significant security risks.

On the right, a Kubernetes cluster with Istio and ColorTokens Xshield shows how enhanced security is achieved. The Istio control plane manages east-west traffic between pods, while the Xshield controller monitors and secures pod communication, ensuring improved visibility and policy enforcement. Xshield closes key gaps such as unmonitored pod-to-pod traffic and supports compliance and micro-segmentation.

Key gaps filled by Xshield include:

- Protected and monitored traffic

- Improved visibility

- Enhanced security posture across pods and nodes

Overall, ColorTokens Xshield integrates with Istio for traffic visibility and Open Policy Agent (OPA) for granular API-level policy enforcement, delivering a comprehensive security solution across both Kubernetes and non-Kubernetes environments.

Appendix A -- Kubernetes Infrastructure

Containers

Containers emerged as a solution to the inefficiencies associated with virtual machines (VMs), which often included unnecessary components for simpler applications. A VM typically includes an entire operating system (OS), even for tasks as basic as running a single application, leading to resource overhead. In contrast, containers encapsulate only the application, necessary libraries, and supporting binaries, operating within the kernel of the host OS, managed by a container engine (e.g., Docker, containerd). This setup reduces overhead and boosts performance by sharing the host OS kernel, making containers ideal for scalable and efficient deployments, particularly within service mesh and Kubernetes environments.

Container Orchestration

While individual containers can be managed manually, the scaling and management of containerized applications require robust orchestration tools. Early solutions like Docker Swarm helped with basic orchestration, but cloud-native services such as AWS Fargate and Azure Container Instances offer improved serverless container management. However, for large-scale, complex deployments, these tools may fall short in providing comprehensive lifecycle management.

Kubernetes (K8s) has become the de facto standard for container orchestration due to its extensive capabilities in managing container lifecycles, including automated deployment, scaling, self-healing (restarting failed containers), and rollback. Although Kubernetes can be more resource-intensive and complex than simpler alternatives, its flexibility and features make it the preferred choice for production-scale workloads.

Kubernetes Architecture

Kubernetes architecture is composed of two main components:

Control Plane

This component is responsible for managing the overall state of the cluster, making decisions about scheduling and orchestrating containers, and managing the lifecycle of workloads. It includes components like the API server, etcd (for cluster state storage), controller manager, and scheduler.

Worker Nodes

These are the machines (physical or virtual) where the actual application containers run. Each worker node runs a container runtime (e.g., containerd), along with components like kubelet (which communicates with the control plane) and kube-proxy (which manages networking for the node).

Kubernetes can be deployed in two modes:

Managed Kubernetes

In this model, the cloud provider (e.g., AWS, Google Cloud, Azure) manages the control plane components, including scaling, availability, and maintenance. The user is responsible for managing the worker nodes and the workload running on them.

Unmanaged Kubernetes

Here, the user is responsible for managing both the control plane and the worker nodes. This provides greater flexibility and control over the cluster but requires more effort in terms of maintenance, upgrades, and scaling.

Pods and Networking

A fundamental Kubernetes concept is the Pod, which is the smallest deployable unit in Kubernetes. A pod groups one or more tightly coupled containers that share storage volumes and network resources. Pods are typically used to run applications where containers need to work together, such as when separate containers handle different tasks like logging, application code, and sidecar services (e.g., proxies).

Networking between pods is managed through the Container Network Interface (CNI), which allows network configuration for containers. CNI plugins like Calico, Flannel, and Cilium provide inter-pod communication by creating an overlay or routing network, ensuring that each pod gets its own IP address and can communicate seamlessly across nodes in a cluster.

Kubernetes Service

A Kubernetes Service defines a logical set of pods and a policy for accessing them. It acts as an abstraction that decouples the front-end access from the dynamic backend pods. The service maintains a stable IP address and DNS name for the set of pods, even as they scale or are replaced. This setup enables network access, load balancing, and service discovery for Kubernetes workloads.
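The stable name a Service provides follows a predictable pattern, sketched below together with a minimal ClusterIP Service manifest. The helper `service_dns_name`, the service name `reviews`, the namespace `shop`, and the port numbers are illustrative; `cluster.local` is the default cluster domain and may differ in a given cluster.

```python
def service_dns_name(service: str, namespace: str,
                     cluster_domain: str = "cluster.local") -> str:
    """Return the in-cluster DNS name under which a Service is reachable."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# Minimal Service manifest: routes port 80 to port 8080 on matching pods.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "reviews", "namespace": "shop"},
    "spec": {
        "type": "ClusterIP",
        "selector": {"app": "reviews"},  # pods carrying this label are the backends
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}

dns_name = service_dns_name(service["metadata"]["name"],
                            service["metadata"]["namespace"])
print(dns_name)
```

Clients connect to this name, and Kubernetes keeps the set of backing pods current through the label selector, so no caller needs to track individual pod IPs.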

Kubernetes supports different types of services for exposing applications:

ClusterIP

Exposes the service internally within the cluster. This is the default service type.

LoadBalancer

Creates an external load balancer (with support from the cloud provider) to distribute traffic to the service.

NodePort

Exposes the service on a specific port of the worker nodes, making it accessible externally.

Namespaces

Kubernetes uses namespaces to logically separate and group resources within a cluster. Namespaces allow teams to isolate environments (e.g., development, staging, production) and apply different management, security, and access controls across them. Each namespace can have its own policies, quotas, and network configurations, ensuring more manageable and secure multi-tenant environments within the same cluster.

Appendix B -- Benefits of the Istio Service Mesh

Istio service mesh has the following benefits:

- Automated Service Discovery: Automatically detects and connects services in dynamic environments like Kubernetes, eliminating the need for manual configuration.

- Enhanced Security with mTLS: Encrypts traffic between services to ensure secure and authenticated communication.

- Circuit Breaking: Protects services from overloads by automatically stopping requests to a failing service and redirecting traffic to healthy instances, improving overall system reliability and fault tolerance.

- Zero-Trust Architecture: Enforces strong service identities and access controls, making sure every interaction is authenticated and authorized.

- Network Segmentation: Implements policies to prevent unauthorized east-west traffic between services, thereby reducing the attack surface.

- Advanced Telemetry and Metrics: Collects and provides insights into metrics such as latency, traffic volumes, and error rates, enhancing visibility into service communication.

- Multi-Cluster Management: Simplifies the management of services across multiple clusters, facilitating scalable microservices deployments in cloud and on-premises environments.

- Unified Hybrid Cloud Management: Offers a consistent framework for managing services across hybrid cloud environments, ensuring uniform policies and visibility.

- Traffic Management: Includes advanced routing, load balancing, retries, and failovers for improved service resilience.

- Policy Enforcement: Provides fine-grained policy management for rate limiting, quota management, and other custom rules.

- Fault Injection: Allows testing the resilience of services by injecting faults and simulating failures.

- Safe Deployment Strategies: Supports canary releases and other progressive deployment techniques, allowing for controlled testing of new service versions.
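Of the benefits listed above, circuit breaking is the easiest to show in a few lines. The class below is a generic, simplified illustration of the pattern, not Istio's implementation: in a service mesh the behavior is configured declaratively and carried out by the proxy. The names `CircuitBreaker`, `CircuitOpenError`, and `flaky_service` are hypothetical, and the sketch omits the timed recovery a real breaker would have.

```python
class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without contacting the service."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3):
        self.max_failures = max_failures
        self.consecutive_failures = 0

    @property
    def is_open(self) -> bool:
        return self.consecutive_failures >= self.max_failures

    def call(self, func):
        """Run func unless the breaker is open; track consecutive failures."""
        if self.is_open:
            raise CircuitOpenError("circuit open: request rejected")
        try:
            result = func()
        except Exception:
            self.consecutive_failures += 1
            raise
        self.consecutive_failures = 0  # any success resets the count
        return result


calls = {"count": 0}


def flaky_service():
    """Stand-in for a backend that is currently failing."""
    calls["count"] += 1
    raise ConnectionError("service unavailable")


breaker = CircuitBreaker(max_failures=3)
outcomes = []
for _ in range(5):
    try:
        breaker.call(flaky_service)
    except CircuitOpenError:
        outcomes.append("rejected")
    except ConnectionError:
        outcomes.append("failed")

print(outcomes, calls["count"])
```

After three consecutive failures the breaker stops forwarding requests, so the last two attempts never reach the failing backend.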